Illinois enacted the WOPR Act (Wellness and Oversight for Psychological Resources) in 2025 amid rising concern about the unchecked use of artificial intelligence in mental health care.

It was triggered by the tragic suicide of 16-year-old Adam Raine after prolonged interactions with an AI therapy chatbot.

Illinois was the first state to ban AI systems outright from acting as licensed therapy providers.

The law’s stated goal is to protect vulnerable people and keep mental health treatment in the hands of qualified professionals.

Major Provisions of the WOPR Act

| Category | Examples | Specific Rule | Rationale |
| --- | --- | --- | --- |
| Permitted | Scheduling, billing, file management | Administrative processes in clinics | Supports efficiency without interfering in care |
| Permitted | Meditation apps, mood trackers, motivational tools | General wellness applications | Encourages personal growth tools while avoiding therapeutic claims |
| Permitted | Clinical trials, academic studies | Research projects with professional supervision | Allows innovation under strict oversight by licensed specialists |
| Prohibited | Automated therapy bots posing as professionals | AI chatbots conducting diagnostic tests, offering prescriptions, or counseling sessions | Lack of liability, no empathetic capacity, risk of harm to patients |
| Prohibited | Using AI for live therapy conversations instead of transcription/reminders | Licensed psychologists applying AI during therapy sessions beyond minor assistance | Prevents AI from substituting for licensed judgment |
| Prohibited | Nationwide platforms blocked in Illinois | Firms selling AI-based mental health services directly to Illinois residents | Ensures patients are guided toward human professionals |

Legislators who sponsored the WOPR Act wanted to draw a clear line between assistive administrative technology and technologies that purport to offer psychological care.

Concern grew as more cases emerged of people turning to autonomous AI systems with deeply personal issues and suffering unintended harm.

What’s Prohibited

The act’s prohibitions specifically target cases where AI may substitute for, or mimic, a human therapist.

Legislators viewed such AI interactions as dangerous because an AI system cannot be held liable and lacks the empathy and training that are critical to therapy.

Key prohibitions include:

What is Permitted?

Not all applications of AI are prohibited. Legislators recognized that artificial intelligence is a valuable aid in non-clinical work.

Rather than barring every application, the act allows AI programs that enhance productivity and wellness without displacing the skilled judgment of a professional.

Allowed uses are:

Supporters of the WOPR Act frequently cite tragedies like the Adam Raine case to argue that AI cannot be trusted with psychological care.

They point to legal precedents where even human professionals are held liable for medical negligence, such as emergency room malpractice, which often leads to lawsuits and multimillion-dollar settlements in Illinois.

For instance, rosenfeldinjurylaw.com outlines how patients harmed by diagnostic failures or delayed treatment in Chicago ERs regularly pursue justice through the courts.

Enforcement Mechanisms

Effective regulation depends on effective enforcement, and Illinois built mechanisms to secure compliance.

Legislators recognized that without penalties and oversight, firms would have little reason to abide by the limits.

Assigning responsibility to the Illinois Department of Financial and Professional Regulation created a single, centralized body that can monitor healthcare compliance and levy fines.

Key enforcement features include:

Industry responses reflect the stark effect of the measures. Ash Therapy, a leading AI therapy vendor, immediately discontinued access for Illinois users.

Other businesses will likely follow suit, because the reputational and financial risks of violating the law outweigh the potential income from Illinois.

Officials also hope these visible examples will encourage voluntary compliance and discourage the use of loopholes.

Broader Implications and Motivations

The WOPR Act did not just shape policy in Illinois but also sparked national debates about how much control states should exercise over artificial intelligence in healthcare.

Some observers see the act as a genuine patient safety measure, while others claim it protects therapists’ jobs by reducing competition.

Patient Safety vs. Economic Protectionism

Illinois government officials justified the act as necessary to protect vulnerable people.

They stressed accounts of harm in which patients relied on unmonitored AI to cope with severe emotional issues.

From their perspective, therapy should always remain in the hands of trained professionals who can respond with responsibility, care, and competence.

Critics, however, argue that the act also protects therapists’ jobs by keeping AI competitors out of the market.

Chief arguments framing this debate are:

Both viewpoints reflect an ongoing tension between advancing health care technology and preserving traditional models of care.

Enforcement Ambiguities

Clear enforcement becomes more complicated when AI tools blur the line between wellness and therapy.

Regulators face a challenge in deciding where personal support ends and professional treatment begins.

Wellness apps often provide encouragement or guided exercises that may resemble therapy in practice, even if marketed differently.

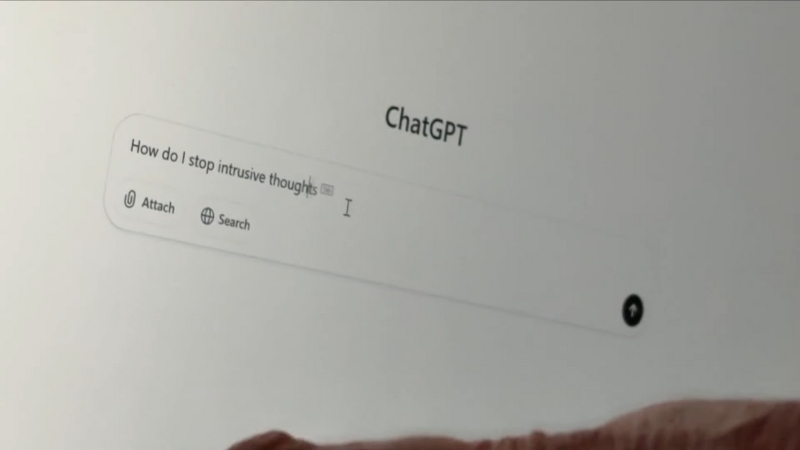

ChatGPT-like platforms complicate the matter further since casual conversations can easily shift into mental health territory.

Examples of potential gray areas include:

Such ambiguities raise the likelihood of lawsuits where companies test the limits of the law in court.

Illinois may therefore face future legal battles over how these provisions are interpreted and enforced in practice.

Impact on Healthcare and Tech Sectors

Healthcare and technology sectors are already adjusting to the implications of the WOPR Act.

Providers must carefully review their current systems to avoid violations, while tech companies face pressure to rethink product design and marketing.

Compliance costs are expected to rise as organizations implement stricter safeguards, but noncompliance carries even higher risks.

Action Items for Healthcare Executives

Executives leading healthcare organizations must take proactive steps to stay aligned with the law.

Without preparation, clinics risk fines, lawsuits, or damage to patient trust.

Specific steps include:

Building systems for monitoring AI interactions, tracking patient outcomes, and documenting adverse events for compliance records
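
As a rough illustration of what such record-keeping could look like, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the category names, the `AIInteractionRecord` fields, and the review rule are hypothetical and are not taken from the text of the WOPR Act or from any regulator's guidance.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical categories, loosely following the permitted uses described
# in this article (administrative, wellness, supervised research).
PERMITTED_USES = {"administrative", "wellness", "supervised_research"}


@dataclass
class AIInteractionRecord:
    """One logged use of an AI tool. All field names are illustrative."""
    tool_name: str
    use_category: str
    clinician_reviewed: bool
    adverse_event: Optional[str] = None
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def needs_review(self) -> bool:
        # Deliberately conservative rule (an assumption, not a legal test):
        # flag any use outside the assumed permitted categories, any use no
        # clinician has reviewed, and any reported adverse event.
        return (
            self.use_category not in PERMITTED_USES
            or not self.clinician_reviewed
            or self.adverse_event is not None
        )


def to_audit_line(record: AIInteractionRecord) -> str:
    """Serialize a record as one JSON line for an append-only audit log."""
    entry = asdict(record)
    entry["needs_review"] = record.needs_review()
    return json.dumps(entry, sort_keys=True)
```

A clinic could append each line returned by `to_audit_line` to a log file and periodically pull the entries where `needs_review` is true. The actual categories and thresholds would need to come from counsel and from the regulator, not from this sketch.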

Industry Reactions

Technology companies are shifting their strategies to adapt to the Illinois ban.

Some have withdrawn their AI therapy services from the state entirely, while others limit functionality to wellness-oriented features.

Industry analysts predict that Illinois will not be the only state to move in this direction, prompting companies to prepare for wider restrictions nationwide.

Likely industry responses include:

Summary

The WOPR Act of 2025 marks a decisive shift in artificial intelligence regulation within healthcare, moving away from light oversight to outright prohibition in some areas.

At the same time, rapid breakthroughs in medical and diagnostic equipment highlight the contrast between advancing clinical tools and tightening restrictions on artificial intelligence.

Illinois lawmakers framed the law as a protective measure for patients while also addressing concerns over professional job security.

How other states respond will shape the future of AI in therapy, either reinforcing Illinois’ restrictive model or favoring frameworks that permit cautious, supervised use.
